Supervision: Konstantinos Pitas

Project type:

Semester project (master)

Master thesis

Finished

Project Description

How much information have deep neural network weights memorized after training? One way of answering this question is by adding as much noise as possible to the network weights without hurting the training accuracy [1].

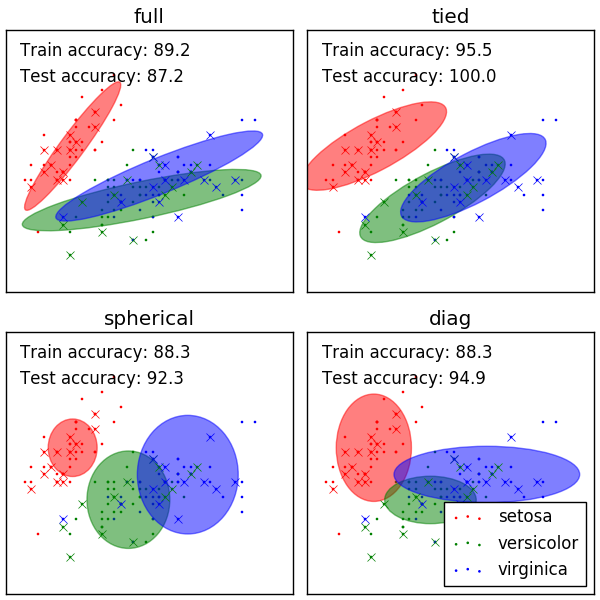
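The noise-injection idea above can be illustrated with a minimal sketch. It assumes a toy linear classifier and isotropic Gaussian noise; the data, the weights, the candidate noise levels, and the helper names (`accuracy`, `noisy_accuracy`, `largest_tolerated_sigma`) are all hypothetical and not taken from [1]. The code searches for the largest noise scale that leaves the average training accuracy within a small tolerance of the noise-free accuracy.

```python
import random

def accuracy(weights, data):
    """Fraction of (x, y) pairs classified correctly by sign(w . x)."""
    correct = 0
    for x, y in data:
        score = sum(w * xi for w, xi in zip(weights, x))
        predicted = 1 if score > 0 else -1
        if predicted == y:
            correct += 1
    return correct / len(data)

def noisy_accuracy(weights, data, sigma, rng, n_samples=200):
    """Average training accuracy under isotropic Gaussian weight noise."""
    total = 0.0
    for _ in range(n_samples):
        perturbed = [w + rng.gauss(0.0, sigma) for w in weights]
        total += accuracy(perturbed, data)
    return total / n_samples

def largest_tolerated_sigma(weights, data, sigmas, tolerance=0.01, seed=0):
    """Largest candidate sigma whose accuracy drop stays within tolerance."""
    rng = random.Random(seed)
    clean = accuracy(weights, data)
    best = 0.0
    for sigma in sorted(sigmas):
        if clean - noisy_accuracy(weights, data, sigma, rng) <= tolerance:
            best = sigma
        else:
            break  # stop at the first noise level that hurts accuracy
    return best

# Hypothetical training set: the label is the sign of the first feature.
data = [((1.0, 0.3), 1), ((2.0, -0.5), 1), ((1.5, 0.8), 1),
        ((-1.0, 0.4), -1), ((-2.0, -0.7), -1), ((-1.5, 0.1), -1)]
weights = [3.0, 0.0]  # hand-picked "trained" weights for illustration
sigmas = [0.01, 0.1, 0.3, 1.0, 3.0, 10.0]

print(largest_tolerated_sigma(weights, data, sigmas))
```

For a real network the same loop would perturb all layers and evaluate on the full training set, which is far more expensive than this toy case.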

For simple noise, such as Gaussian noise with diagonal covariance, the amount of noise to add can be optimised in a number of ways [2]. More complex noise distributions give more flexibility: we can add more noise to less important parameters and less noise to more important ones. However, the corresponding optimization problems are hard.
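As a rough illustration of the diagonal Gaussian case, the sketch below computes the KL divergence between the noisy weight distribution N(w, diag(sigma^2)) and a zero-mean isotropic Gaussian prior. Using this KL term as the measure of information in the weights is an assumption made here for illustration, and the function name `kl_diag_gaussian` and the prior scale `prior_std` are hypothetical.

```python
import math

def kl_diag_gaussian(mean, sigma, prior_std=1.0):
    """KL( N(mean, diag(sigma^2)) || N(0, prior_std^2 I) ) in nats.

    Per parameter: 0.5 * (s^2/p^2 + m^2/p^2 - 1 - log(s^2/p^2)).
    """
    total = 0.0
    for m, s in zip(mean, sigma):
        ratio = (s / prior_std) ** 2
        total += ratio + (m / prior_std) ** 2 - 1.0 - math.log(ratio)
    return 0.5 * total

weights = [1.0, 2.0]  # hypothetical trained weights

# Less noise on the weights means a larger KL term (more information kept).
print(kl_diag_gaussian(weights, [0.1, 0.1]))
print(kl_diag_gaussian(weights, [1.0, 1.0]))
```

In an optimisation, each `sigma` entry would be a trainable parameter, and this term would be traded off against the training loss measured under the sampled noise.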

Project Goals

In this project, the student will work on computing lower bounds on the amount of information contained in the weights of a trained deep neural network for complicated noise distributions, such as Gaussians with full or block-diagonal covariance, or the directional von Mises distribution [3].
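To give a feel for what a full or block-diagonal covariance involves, here is a small sketch for a single 2x2 covariance block. It assumes the block is parameterised through its Cholesky factor, which is one common choice and not necessarily the approach intended for the project; the helper names and the example matrix are hypothetical. The Cholesky factor yields both correlated noise samples and the log-determinant that appears in Gaussian KL terms.

```python
import math
import random

def cholesky(cov):
    """Lower-triangular L with L L^T = cov (cov symmetric positive definite)."""
    n = len(cov)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(cov[i][i] - s)
            else:
                L[i][j] = (cov[i][j] - s) / L[j][j]
    return L

def sample_weights(mean, L, rng):
    """Draw w = mean + L eps with eps ~ N(0, I), so that Cov(w) = L L^T."""
    eps = [rng.gauss(0.0, 1.0) for _ in mean]
    return [m + sum(L[i][k] * eps[k] for k in range(i + 1))
            for i, m in enumerate(mean)]

def log_det_from_cholesky(L):
    """log det(L L^T) = 2 * sum of log of the diagonal entries of L."""
    return 2.0 * sum(math.log(L[i][i]) for i in range(len(L)))

cov_block = [[4.0, 2.0], [2.0, 3.0]]  # hypothetical covariance block
L = cholesky(cov_block)
rng = random.Random(0)

print(L)
print(sample_weights([0.5, -1.0], L, rng))
print(log_det_from_cholesky(L))  # equals log(4*3 - 2*2) = log(8)
```

With a block-diagonal covariance, each block can be factorised independently, which keeps the cost manageable compared with one full covariance over all weights.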

Prerequisites

The student should be highly motivated and should have good knowledge of TensorFlow/Keras and/or PyTorch. Ideally, the student should have experience working with large architectures such as VGG-16.

The project is 20% theory and 80% application.

This is a master or semester project.

Contact

Contact me by email at konstantinos.pitas@epfl.ch or pass by ELE 227 for a quick discussion.

[1] Where is the information in a deep neural network? https://arxiv.org/abs/1905.12213

[2] Variational Dropout and the Local Reparameterization Trick https://papers.nips.cc/paper/5666-variational-dropout-and-the-local-reparameterization-trick.pdf

[3] Radial and Directional Posteriors for Bayesian Neural Networks https://arxiv.org/abs/1902.02603